Euromed Marseille School of Management, World Med MBA Program - Information Systems and Strategy Course

The earliest computing machines had fixed programs and forced the operator to change their physical layout to alter the program. These computers were not so much "programmed" as "designed". "Reprogramming" was a manual process, starting with flow charts and paper notes, followed by detailed engineering designs, and ending with re-wiring, or even re-structuring, the whole machine.

The development of the von Neumann machine and stored programming languages during the 1950s eased this problem somewhat; however, the emphasis remained firmly on the design of the hardware. Most machines struggled to function reliably for more than a few minutes at a time, and computer scientists devoted the bulk of their efforts to solving these problems. By comparison, the programming of the machine was regarded as a relatively straightforward exercise. However, as hardware became cheaper, more reliable and more complex, and the programs that could be run on the machines became more elaborate, people began to talk about a new set of problems: the problems of software rather than the problems of programs or hardware.

Before the 1960s, separate notions of 'programs' and 'software' did not exist. However, during the 1960s, the use of the term 'software' became more common. The split between 'software' and 'programs' first occurred with the increasing importance of non-numerical computation, especially data processing in business applications. The old ways of doing things had started to become less effective at providing solutions to these new problems - even machine-oriented problems such as storing data now needed to take into account the world outside the computer.

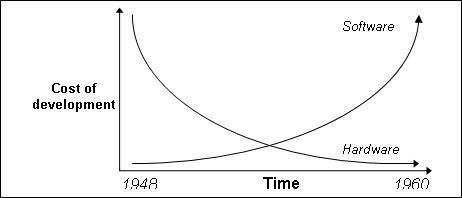

By the end of the 1960s, hardware costs had fallen exponentially, and were continuing to do so, while the cost of software development was rising at a similar rate.

The apparent problem of incomplete, poorly performing software came to be referred to as "the software crisis". Until this time, the attitude of many of the people involved in the computer industry had been to treat computing almost as a craft industry. Computers (both software and hardware) were created as 'one-off' items for particular applications. One of the pioneers of programming, Edsger Dijkstra, recalled,

"Programming started out as a craft which was practised intuitively. By 1968, it began to be generally acknowledged that the methods of program development followed so far were inadequate... After the methods then employed had been identified as fundamentally inadequate, a style of design was developed in which the program and its correctness ... were designed hand in hand. This was a dramatic step forward."

With the emergence of large and powerful general-purpose mainframes (such as the IBM 360), large and complex systems became possible. People began to ask why project failures, cost overruns and so on were so much more common than with other large projects and, in the late 1960s, the new discipline of Software Engineering was born. Now, for the first time, the creation of software was treated as a discipline in its own right, demanding as much reasoning and consideration as the hardware on which it was to run.

As programs and computers became part of the business world, so their development moved out of the world of bespoke craft work and became a commercial venture; the buyers of software increasingly demanded a product that was built to a high quality, on time and within budget. Many large systems of that time were seen as absolute failures - either they were abandoned, or did not deliver any of the anticipated benefits.

A number of fundamental problems with the process of software development were identified:
